Self-Regulating Generative AI for Scientific Imaging


Generative AI is famous for its creativity—but in scientific imaging, creativity is a liability. When an AI “hallucinates” a detail that isn’t there, it compromises the integrity of physical measurements. This is the exact problem we addressed in our latest research, DIFFRACT, accepted at AIM@ICCV 2025.

The Challenge: Beam-Sensitive Materials

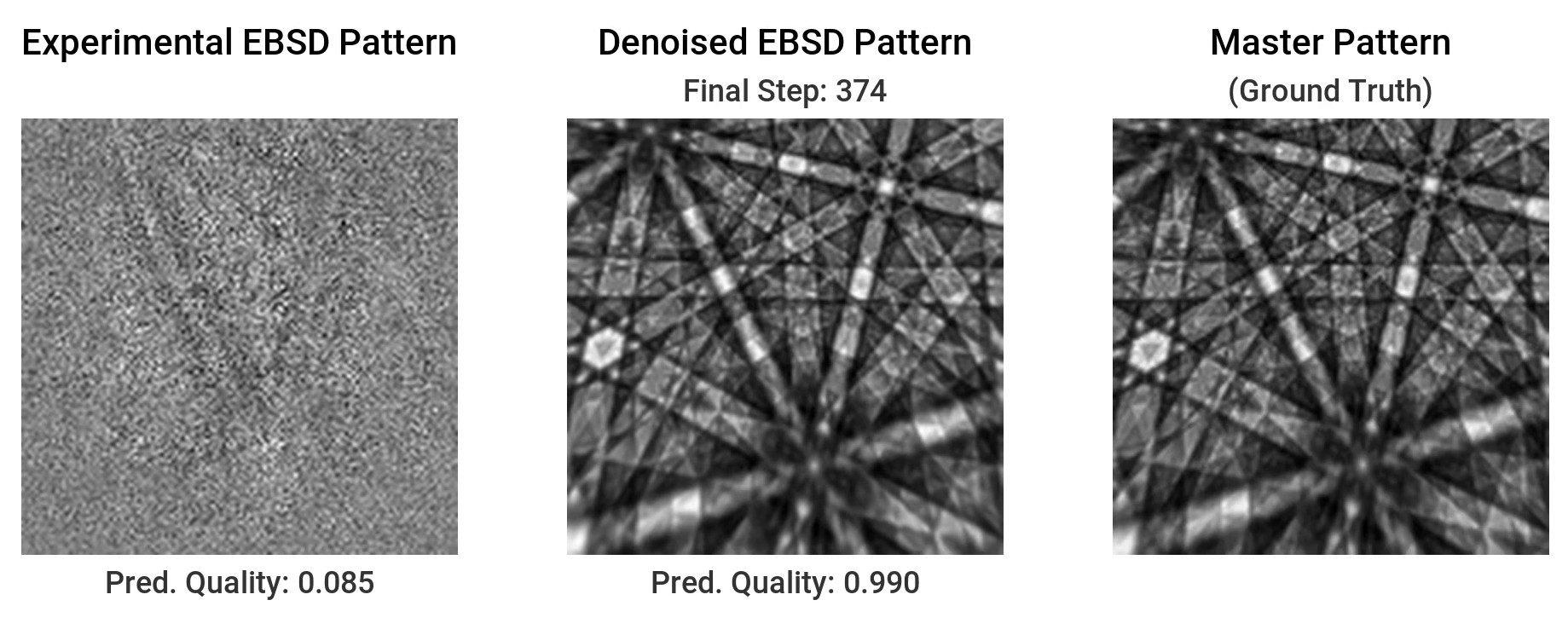

Electron Backscatter Diffraction (EBSD) is vital for revealing the nanoscale crystal structure of materials, such as grain orientations and phases. However, emerging materials for solar energy (like hybrid perovskites) are “beam-sensitive”: they degrade rapidly under the electron beam. To capture them, scientists must use ultra-low electron doses, which leave the diffraction patterns buried under heavy noise. Standard denoising methods break down at these noise levels, and standard generative models often invent crystal patterns where none exist.
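A simple way to see why low dose means heavy noise is an idealized shot-noise model. This is an assumption: real detectors add other noise sources. If a pixel records Poisson-distributed counts with mean N, the standard deviation is sqrt(N), so the signal-to-noise ratio is N / sqrt(N) = sqrt(N). Cutting the dose by 100x therefore cuts the SNR by 10x. The sketch below checks this numerically. `shot_noise_snr` is a hypothetical helper, not part of DIFFRACT.

```python
import numpy as np


def shot_noise_snr(dose: float, n_pixels: int = 100_000, seed: int = 0) -> float:
    """Empirical SNR (mean / std) of a uniformly lit pixel under Poisson shot noise.

    `dose` is the expected number of detected counts per pixel, an idealized
    stand-in for electron dose (real detectors add further noise sources).
    """
    rng = np.random.default_rng(seed)
    counts = rng.poisson(lam=dose, size=n_pixels)
    return float(counts.mean() / counts.std())


for dose in (1, 10, 100, 1000):
    print(f"dose={dose:>5}  SNR~{shot_noise_snr(dose):6.2f}  sqrt(dose)={np.sqrt(dose):6.2f}")
```

Under this model, a pattern recorded at one count per pixel has an SNR of about 1, so the noise is as large as the signal itself.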

The Innovation: Adaptive, Feedback-Driven Diffusion

To the best of our knowledge, DIFFRACT is the first successful application of diffusion models to EBSD pattern restoration.

Unlike standard “black box” diffusion models that run a fixed number of steps, we introduced a novel adaptive, feedback-driven scheduling mechanism.

- Self-Regulation: At each denoising step, the model estimates the current image quality.

- Dynamic Control: It uses this feedback to guide subsequent steps and, crucially, decides exactly when to stop.

This allows the system to distinguish real signal from noise, effectively preventing the “hallucinations” common in generative approaches.
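The loop below is a minimal sketch of the general idea of feedback-driven denoising with early stopping. It is not the DIFFRACT implementation. It assumes a hypothetical `denoise_step(x, strength)` callable that stands in for one reverse-diffusion update, and a hypothetical `quality(x)` estimator. The strength-halving rule, `tol`, and `patience` are illustrative choices. After each step, the quality score decides three things: whether to accept the update, how aggressive the next step should be, and when to stop. This replaces a fixed step count.

```python
import numpy as np
from typing import Callable


def adaptive_denoise(
    x: np.ndarray,
    denoise_step: Callable[[np.ndarray, float], np.ndarray],  # hypothetical one-step denoiser
    quality: Callable[[np.ndarray], float],                    # hypothetical quality estimator
    max_steps: int = 50,
    strength: float = 0.5,
    tol: float = 1e-3,
    patience: int = 3,
) -> tuple[np.ndarray, int, list[float]]:
    """Run denoising steps, using a quality score as feedback after each one."""
    q = quality(x)
    history = [q]
    stalls = 0
    steps_used = 0
    for steps_used in range(1, max_steps + 1):
        candidate = denoise_step(x, strength)
        q_new = quality(candidate)
        if q_new > q + tol:
            # Quality improved: accept the update and keep the current strength.
            x, q = candidate, q_new
            stalls = 0
        else:
            # No meaningful gain: reject the update and take gentler steps.
            strength *= 0.5
            stalls += 1
            if stalls >= patience:
                break  # early stop instead of running a fixed step count
        history.append(q)
    return x, steps_used, history


# Toy usage: a noisy sine wave stands in for a low-dose pattern.
rng = np.random.default_rng(0)
t = np.linspace(0, 4 * np.pi, 200)
clean = np.sin(t)
noisy = clean + rng.normal(scale=0.5, size=t.shape)
kernel = np.ones(5) / 5


def smooth_step(x: np.ndarray, strength: float) -> np.ndarray:
    # Blend x toward its 5-tap moving average; larger strength = stronger smoothing.
    return x + strength * (np.convolve(x, kernel, mode="same") - x)


def oracle_quality(x: np.ndarray) -> float:
    # Toy only: negative MSE against the known clean signal.
    return -float(np.mean((x - clean) ** 2))


restored, steps_used, history = adaptive_denoise(noisy, smooth_step, oracle_quality)
print(f"stopped after {steps_used} steps; MSE {-history[0]:.4f} -> {-history[-1]:.4f}")
```

The toy's "quality" is an oracle: the negative MSE against a signal we already know. A real low-dose experiment has no such ground truth, so the score would have to come from the model's own quality estimate, as in the feedback step described above. Rejecting non-improving updates keeps the accepted quality from ever decreasing. Stopping after a few stalled steps avoids over-processing the input.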

Broader Impact

While designed for EBSD, this architecture represents a step forward for scientific imaging as a whole. We have turned a generative process into a precisely controlled tool, making it possible to study unstable materials that were previously difficult to analyze in detail.